Why AI can’t write risk assessments (and why humans still matter)

World Day for Safety and Health at Work 2025

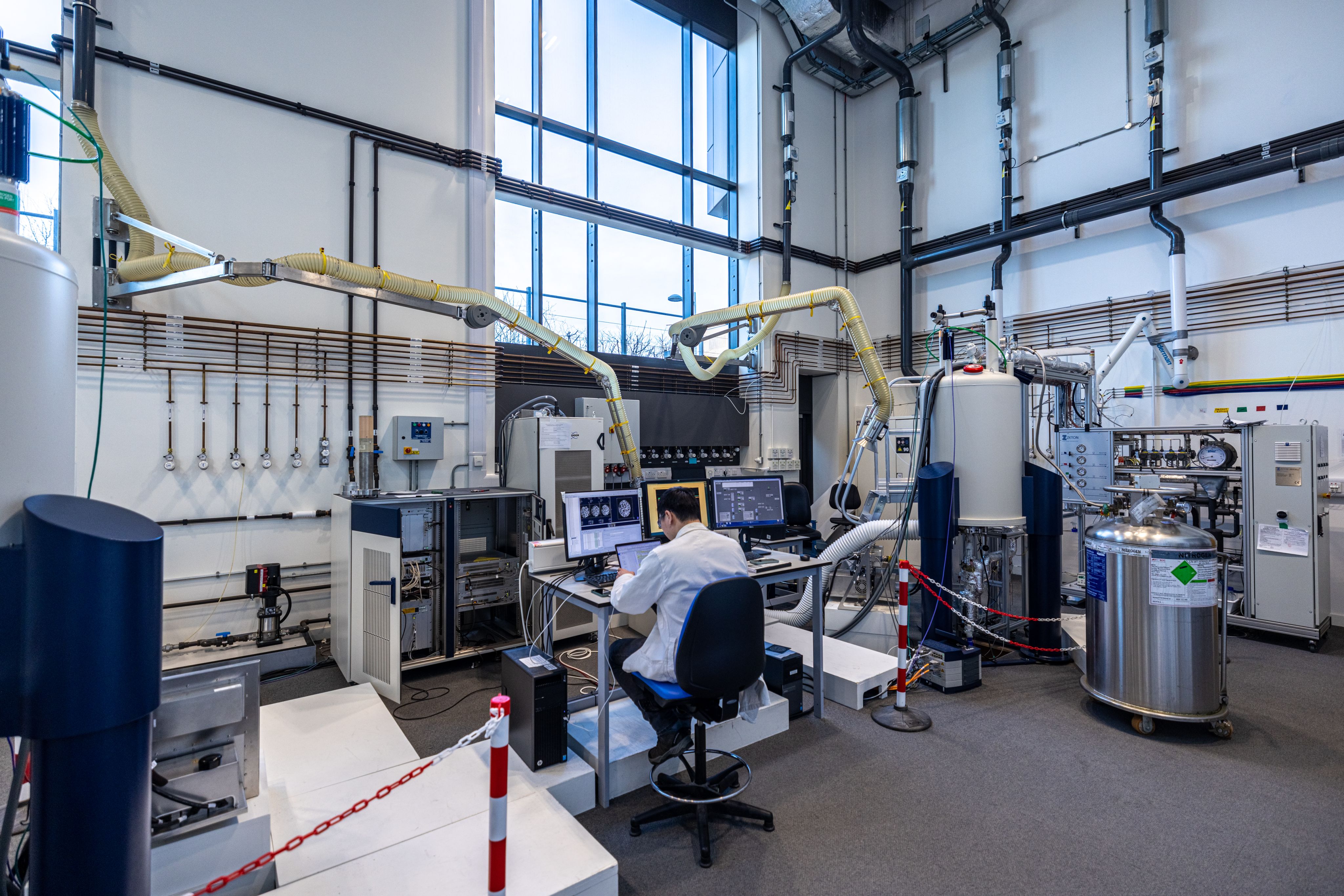

At CEB, safety is never routine. We have what our Departmental Safety Manager calls a ‘broad risk profile’. Under one roof you’ll find pathogens, high-powered lasers, superconducting magnets and a cocktail of corrosive chemicals, all being used to research solutions to some of the world’s biggest challenges.

“If there’s something hazardous, we probably have it,” said Dr Jessica Fitzgibbon.

In the run-up to World Day for Safety and Health at Work, the Communications Team asked ChatGPT to draft a risk assessment for a simple hypothetical experiment involving a dangerous chemical. We wanted to see how far generative AI could take us in the world of safety, and whether human expertise remains at the heart of keeping people safe.

Hint: It does.

Our experiment revolved around hydrochloric acid, which is corrosive to living tissue, as most of us will remember from school chemistry lessons. It presents a severe risk of harm to the people carrying out the research, as one splash could damage skin and other tissues in the human body. It goes without saying that an experiment involving a chemical with the potential to cause serious injury should be rigorously risk-assessed.

So what did ChatGPT think of this?

With no other context provided, the AI tool created a risk assessment for a secondary school environment in the deeply convincing location of ‘School Science Laboratory’. It also suggested disposing of any hydrochloric acid ‘according to school/local chemical waste guidelines.’

It sounded plausible, but revealed a core flaw in using AI for safety-critical documentation.

“That's always the fall down with AI – it's not specific and a risk assessment has to be,” Dr Fitzgibbon said. “When you’re talking about the science we’re doing here, it's work that no one else has done before. An AI-generated risk assessment will not be able to talk about the work we're doing because it's not in its model.”

While technology has huge potential in health and safety, particularly in making vital safety information easily accessible, its advance also brings the temptation to cut corners.

Dr Fitzgibbon understands this temptation, but takes pains to point out that the benefits of taking health and safety seriously outweigh the time invested in doing it properly.

“It’s important to remember – you're worth it,” Dr Fitzgibbon said. “You're worth the time it takes to do it properly. You're worth the time it takes to plan, to set things up, to get the right equipment, to do it tomorrow instead of squeezing it in at the end of today. You're worth taking that time to make sure that you're looking after yourself.”

And with such a complex safety landscape, CEB’s small safety team faces this challenge daily. Legacy systems add to the load.

“The department has a huge legacy of paper records,” Dr Fitzgibbon added. “Stuff that really should be digitised – it would make things so much easier to access and process.”

The future of health and safety may well involve AI and automation – but it’s still human judgement, experience and care that keep people safe.